How to Scrape Google Local Results

This is an article on how you can scrape local results from Google.

- How Local Data Can Boost Marketing and Business Strategies:

- Advanced Tools for Efficient Local Scraping:

- How to scrape Google Local results

- Conclusion

Local data is gold for market researchers. They can use it to study consumer behavior, preferences, and trends in specific areas. This information can be used to tailor products and services to meet the needs of local communities. So, whether you're a curious consumer, a business owner, or a sharp market researcher, Google Local Results data is a vast array of insights waiting to be uncovered!

How Local Data Can Boost Marketing and Business Strategies:

Local data is like a secret weapon for businesses and marketers. Let's say you own a small bakery in a neighborhood. By scraping Google Local Results, you can see what people are saying about your bakery compared to other nearby bakeries. This feedback can guide you in making improvements and highlighting what sets your bakery apart.

For marketers, having access to local data means they can create highly targeted campaigns. They can send special offers to people in specific areas, advertise events to local communities, and even tailor their messaging to match the interests and preferences of different neighborhoods. This level of personalization can significantly boost the effectiveness of marketing efforts.

1.Python:

Python is a special language that helps us give instructions to computers. It's known for being easy to read and write, making it a great choice for tasks like scraping data.

2.BeautifulSoup and Requests:

Imagine if we could have tools that help us understand and grab information from websites. That's exactly what BeautifulSoup and Requests are! BeautifulSoup helps us make sense of the information on a webpage, while Requests helps us talk to the website and get the data we want. They're like magic tools that make web scraping much easier.

Advanced Tools for Efficient Local Scraping:

1.Scrapy:

Scrapy is like a super organized assistant for web scraping. It helps us gather information from websites in a very neat and efficient way. It's like having a helper who knows exactly where to look and how to grab the data we need. This makes large-scale scraping projects much more manageable.

2.Selenium:

Selenium can click buttons, fill out forms, and interact with web pages in a way that mimics human actions. This is super handy for dealing with websites that have complex interactions or dynamic content. Selenium is like our trusty sidekick in the world of web scraping.

So, when it comes to scraping data from Google Local Results, we have a toolbox filled with Python and these amazing libraries. They work together to make the process efficient, organized, and even handle tricky situations on the web. With these tools, we're well-equipped to gather the information we need!

How to scrape Google Local results

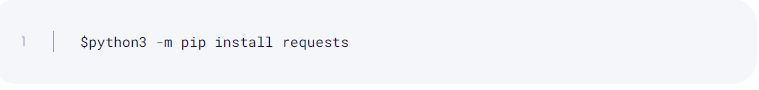

To install this library, use the following command:

If you’re using Windows, choose Python instead of Python3. The rest of the command remains the same:

2. Set up a payload and send a GET request

Create a new file and enter the following code:

query = {

"q": "smart phone",

"hl": "fr",

"lr": "lang_fr",

"cr": "countryFR"

}

# build to url to make request

url = f"https://api.serply.io/v1/search/" + urllib.parse.urlencode(query)

resp = requests.get(url, headers=headers)

results = resp.json()

print(f"Next 100 results: {results}")

# use the proxy location

headers["X-Proxy-Location"] = "FR"

# build to url to make request

url = f"https://api.serply.io/v1/search/" + urllib.parse.urlencode(query)Notice how the url in the payload dictionary is a Google search results page. In this example, the keyword is newton.

As you can see, the query is executed and the page result in HTML is returned in the content key of the response.

Customizing query parameters

Let's review the payload dictionary from the above example for scraping Google search data.

query = {

"q": "smart phone",

"hl": "en",

"num": 100

}

# build to url to make request

url = f"https://api.serply.io/v1/search/" + urllib.parse.urlencode(query)

resp = requests.get(url, headers=headers)

results = resp.json()

print(f"First 100 results: {results}")The dictionary keys are parameters used to inform Google Scraper API about required customization.

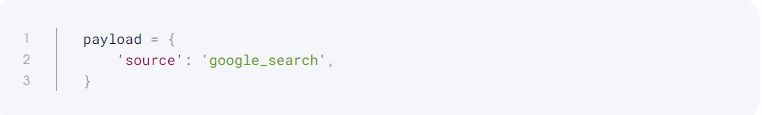

The first parameter is the source, which is really important because it sets the scraper we’re going to use.

The default value is Google – when you use it, you can set the url as any Google search page, and all the other parameters will be extracted from the URL.

Although in this guide we’ll be using the google_search parameter, there are many others – google_ads, google_hotels, google_images, google_suggest, and more. To see the full parameter list, head to our documentation.

Keep in mind that if you set the source as google_search, you cannot use the url parameter. Luckily, you can use several different parameters for acquiring public Google SERP data without having to create multiple URLs. Let’s find out more about these parameters, shall we?

Basic parameters

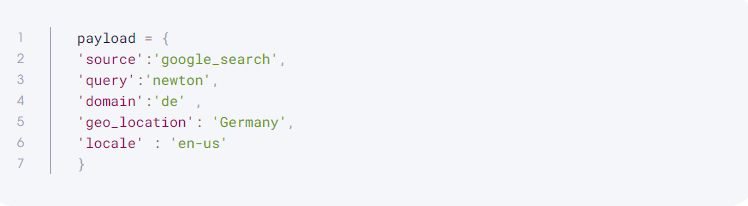

We’ll build the payload by adding the parameters one by one. First, begin with setting the source

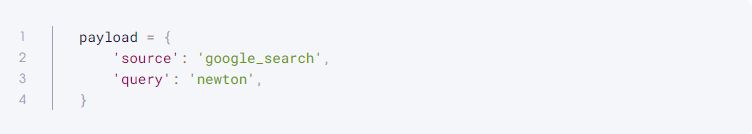

Now, let’s add query – a crucial parameter that determines what search results you’ll be retrieving. In our example, we’ll use newton as our search query. At this stage, the payload dictionary looks like this:

That said, google_search and query are the two essential parameters for scraping public Google search data. If you want the API to return Google search results at this stage, you can use payload. Now, let’s move to the next parameter.

Location query parameters

You can work with a domain parameter if you want to use a localized domain – for example, 'domain':'de' will fetch results from google.de. If you want to see the results from Germany, use the geo_location parameter— 'geo_location':'Germany'. See the documentation for the geo_location parameter to learn more about the correct values.

Also, here’s what changing the locale parameter looks like:

To learn more about the potential values of the locale parameter, check the documentation, as well.

If you send the above payload, you’ll receive search results in American English from google.de, just like anyone physically located in Germany would.

Controlling the number of results

By default, you’ll see the first ten results from the first page. If you want to customize this, you can use these parameters: start_page, pages, and limit.

The start_page parameter determines which page of search results to return. The pages parameter specifies the number of pages. Finally, the limit parameter sets the number of results on each page.

For example, the following set of parameters fetch results from pages 11 and 12 of the search engine results, with 20 results on each page:

query = {

"q": "smart phone",

"hl": "en",

"num": 100,

"start": 200

}

# build to url to make request

url = f"https://api.serply.io/v1/search/" + urllib.parse.urlencode(query)

resp = requests.get(url, headers=headers)

results = resp.json()

print(f"Next 100 results: {results}")Apart from the search parameters we’ve covered so far, there are a few more you can use to fine-tune your results – see our documentation on collecting public Google Search data.

Python code for scraping Google search data

Now, let’s put together everything we’ve learned so far – here’s what the final script with the shoes keyword looks like:

import os

import urllib

import requests

API_KEY = os.environ.get("API_KEY")

# set the api key in headers

headers = {"apikey": API_KEY}

query = {

"q": "smart phone",

"hl": "fr",

"lr": "lang_fr",

"cr": "countryFR"

}

# build to url to make request

url = f"https://api.serply.io/v1/search/" + urllib.parse.urlencode(query)

resp = requests.get(url, headers=headers)

results = resp.json()

print(f"Next 100 results: {results}")3. Export scraped data to a CSV

One of the best Google Scraper API features is the ability to parse an HTML page into JSON. For that, you don't need to use BeautifulSoup or any other library – just send the parse parameter as True.

Here is a sample payload:

When sent to the Google Scraper API, this payload will return the results in JSON. To see a detailed JSON data structure, see our documentation.

The key highlights:

- The results are in the dedicated results list. Here, each page gets a new entry.

- Each result contains the content in a dictionary key named content.

- The actual results are in the results key.

Note that there’s a job_id in the results.

The easiest way to save the data is by using the Pandas library, since it can normalize JSON quite effectively.

import csv

f = csv.writer(open("locals.csv", "w", newline=''))

# Write CSV Header, If you dont need that, remove this line

f.writerow(["title", "description", "link"])

for result in results["results"]:

f.writerow([

result['title'],

result['description'],

result['link']

])Alternatively, you can also take note of the job_id and send a GET request to the following URL, along with your credentials.

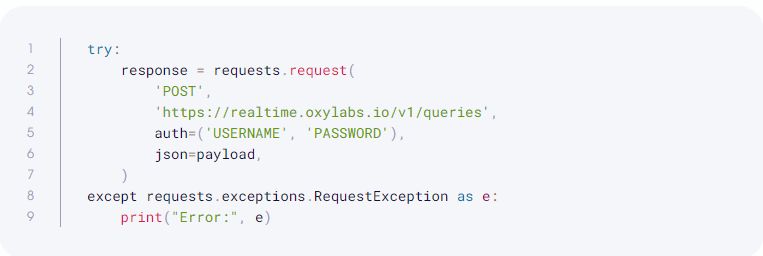

Handling errors and exceptions

When scraping Google, you can run into several challenges: network issues, invalid query parameters, or API quota limitations.

To handle these, you can use try-except blocks in your code. For example, if an error occurs when sending the API request, you can catch the exception and print an error message:

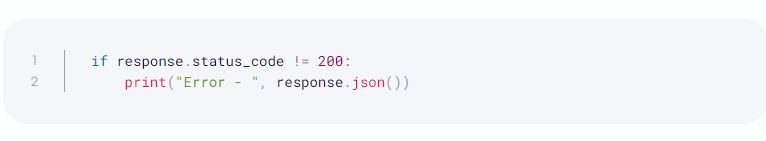

If you send an invalid parameter, Google Scraper API will return the 400 response code.

Conclusion

As we conclude this guide, it's essential to highlight the importance of ethical scraping practices. Respecting the terms of service of websites and APIs, as well as considering the rights and privacy of the data being scraped, is crucial. Additionally, maintaining a balanced approach to scraping that avoids overloading servers and respects anti-scraping measures ensures a positive and sustainable scraping experience for both users and the websites being accessed.